The Clockwork Universe: Determinism Through the Ages of Physics

Albert Einstein once said that “Everything is determined, the beginning as well as the end, by forces over which we have no control. It is determined for the insect, as well as for the star. Human beings, vegetables, or cosmic dust, we all dance to a mysterious tune, intoned in the distance by an invisible piper.”

The origin of the universe and the method by which events are determined are questions that have simultaneously transfixed and eluded humankind for most of our short history. This article will discuss how the scientific community’s conception of determinism evolved through history, with a specific focus on the comparison between the Newtonian and Heisenbergian approaches to examining cause and effect relationships (this will be very oversimplified because I am a Physics 11 student, but bear with me).

Newtonian Physics and Determinism

In his acclaimed work Principia, Isaac Newton laid out the fundamental premise of Newtonian physics and the deterministic principles which it entails: that all events are a result of past events and a defined set of physical laws. In Newton’s own words, “It is inconceivable that inanimate brute matter should, without the mediation of something else, which is not material, operate upon and affect other matter without mutual contact.” In other words, under this model of thought, humans exist within what is known as a clockwork universe, in which everything from particle interactions to celestial mechanics is determined by prior causes.

The deterministic implications of these findings were first explicitly analysed by Pierre-Simon Laplace in the early 19th century. Laplace constructed a thought experiment known as Laplace’s Demon, in which a hypothetical being with complete knowledge of the position and momentum of each and every atom in the universe is able to predict the future with total certainty by making calculations based on the laws of Newtonian physics. In his words:

We may regard the present state of the universe as the effect of its past and the cause of its future. An intellect which at a certain moment would know all forces that set nature in motion, and all positions of all items of which nature is composed, if this intellect were also vast enough to submit these data to analysis, it would embrace in a single formula the movements of the greatest bodies of the universe and those of the tiniest atom; for such an intellect nothing would be uncertain and the future just like the past would be present before its eyes.
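To make the demon's job concrete, here is a minimal sketch of the idea for a single mass on a spring. The system, the step size, and the function names (`acceleration`, `evolve`) are my own assumptions for illustration, not anything Laplace wrote. Given the present position and velocity plus the force law, the same rule produces the future state and, with the velocity reversed, recovers the past one.

```python
# A toy "Laplace's Demon" for one mass on a spring (an assumed example system).
# Knowing the present state and the force law is enough to compute both the
# future and the past.

def acceleration(x, k=1.0, m=1.0):
    """Hooke's law: a = -(k/m) * x."""
    return -(k / m) * x


def evolve(x, v, dt, steps):
    """Advance (position, velocity) using the velocity Verlet method."""
    a = acceleration(x)
    for _ in range(steps):
        x = x + v * dt + 0.5 * a * dt * dt
        a_new = acceleration(x)
        v = v + 0.5 * (a + a_new) * dt
        a = a_new
    return x, v


x0, v0 = 1.0, 0.0  # the "present state" handed to the demon

# Predict the future from the present state.
x_future, v_future = evolve(x0, v0, dt=0.001, steps=5000)

# Recover the past: reverse the velocity and apply the very same law.
x_back, v_back = evolve(x_future, -v_future, dt=0.001, steps=5000)
v_back = -v_back

print("future state:", x_future, v_future)
print("recovered starting state:", x_back, v_back)
```

The recovered state matches the starting state up to rounding error, which is the sense in which "the future just like the past" is fixed by the present in a Newtonian system.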

Inherent to his theory, therefore, is the idea that events can be predicted on the sole basis of linear causality. Special relativity has since shown that time is not as simple as this picture assumes (observers in relative motion can disagree about which events are simultaneous), but this complicates determinism rather than undermining it entirely. Interestingly, Laplace’s articulations would suggest that humans have no meaningful choice or control over their outcomes; because humans are composed of atoms that are, under Laplace’s theories, subject to the same physical laws as planets and galaxies, we would likewise be controlled by cause and effect relationships. There is some neuroscientific evidence for this postulation: in the highly influential Libet experiment of 1983, a group of researchers at the University of California, San Francisco found that a buildup of brain activity known as the readiness potential began several hundred milliseconds before subjects reported consciously deciding to act. Though the study focused on subjects flexing their wrists and not a more deliberative choice, these results do suggest that the premises of free will are questionable. Other scientists, such as Daniel Wegner, have made similar arguments about the illusory nature of free will.

Figure 1: Norton’s Dome

While Newton’s theories have been synthesized and built upon by generations of scientists, the original conclusions still bear weight when considering issues of determinism. For instance, in 2003, John Norton proposed a thought experiment now known as Norton’s Dome, in which a ball sits at the top of a frictionless dome, as shown above in Figure 1. The variable h, the vertical drop from the apex to a given point on the surface, is defined by the equation h = (2/(3g))·r^(3/2), where g is the acceleration due to gravity and r is the geodesic (shortest possible) distance along the surface between the apex and that point. The experiment goes as follows: the ball either stays at the apex forever, or it begins to slide down spontaneously at some arbitrary moment. Norton argues that the latter option is possible within the framework of Newtonian physics, which seems unintuitive given Newton’s First Law. That is, the question becomes, what external force would cause the ball to slide down the dome? The takeaway here is that while Newtonian physics appears simple in some respects, it can still yield surprising insights into causality and randomness: even Newton’s laws may not fix a single future in every case.
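Norton's argument can be checked numerically. For this dome shape, and in units chosen so that the constants equal one (as Norton does), Newton's second law along the surface becomes r'' = sqrt(r). The sketch below tests two candidate motions against that equation: staying at rest forever, and sitting at rest until an arbitrary time T and then sliding with r(t) = (t - T)^4 / 144. The function names and the sample times are my own assumptions for illustration.

```python
import math


def r_rest(t):
    """The ball never leaves the apex."""
    return 0.0


def r_slide(t, T):
    """Norton's spontaneous solution: at rest until time T, then sliding."""
    return 0.0 if t <= T else (t - T) ** 4 / 144.0


def residual(r, t, dt=1e-3):
    """r''(t) - sqrt(r(t)), with r'' estimated by a central finite difference."""
    second_derivative = (r(t + dt) - 2.0 * r(t) + r(t - dt)) / dt**2
    return second_derivative - math.sqrt(r(t))


T = 2.0  # arbitrary moment at which the ball starts to move
times = [0.5, 1.0, 2.0, 2.5, 3.0, 5.0]

worst_rest = max(abs(residual(r_rest, t)) for t in times)
worst_slide = max(abs(residual(lambda t: r_slide(t, T), t)) for t in times)

print("largest residual, ball at rest:", worst_rest)
print("largest residual, ball slides at T:", worst_slide)
```

Both residuals are zero up to the small error of the finite-difference estimate, so both motions satisfy the same law from the same starting state. Since T can be anything, the equation alone does not say when, or whether, the ball moves.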

Objections to Linear Causality

There are several salient objections to Laplace’s formulation of linear causality and determinism. One common objection invokes chaos theory, or the idea that many systems behave in a seemingly disorderly and unpredictable way. However, this unpredictability comes from what's known as the butterfly effect, or in this context, the idea that chaos is a result of systems being sensitive to microscopic discrepancies in initial conditions. While this makes predicting outcomes over a long period of time more difficult because measurements must be incredibly precise (But how precise can these measurements be? More on this later!), its foundations are still in essence compatible with determinism.
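A quick way to see both halves of this point is the logistic map, a standard textbook example of chaos (my choice of example, not one from the sources above). The rule is completely deterministic, so identical starting values give identical histories, yet a starting difference of one part in ten billion eventually grows until the two histories have nothing in common.

```python
def logistic_orbit(x0, steps, r=4.0):
    """Iterate the logistic map x -> r * x * (1 - x), a fully deterministic rule."""
    xs = [x0]
    for _ in range(steps):
        xs.append(r * xs[-1] * (1.0 - xs[-1]))
    return xs


a = logistic_orbit(0.2, 60)
b = logistic_orbit(0.2, 60)          # exactly the same starting value
c = logistic_orbit(0.2 + 1e-10, 60)  # starting value off by 0.0000000001

same_start_gap = max(abs(p - q) for p, q in zip(a, b))
nearby_start_gap = max(abs(p - q) for p, q in zip(a, c))

print("largest gap, identical starts:", same_start_gap)
print("largest gap, nearly identical starts:", nearby_start_gap)
```

The first gap is exactly zero, which is determinism. The second grows to a sizeable fraction of the whole range, which is the loss of predictability: nothing random happened, but the measurement was not precise enough.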

This misunderstanding has carried through much of scientific literature. For instance, in his book Chaos, James Gleick misapprehends the meaning of Laplace’s Demon a bit, saying that humans will never have the capacity to predict the future. The problem is that the demon is not intended to reflect the current or even future ability of humans to predict events, because it's implausible for us to have even a near-complete understanding of all causality. Instead, it's a thought experiment aimed at showing that events are a product of previous factors, regardless of whether scientists will ever have a comprehensive knowledge of all cause and effect relationships. As Christof Koch sums up in his book Consciousness: Confessions of a Romantic Reductionist, “What breaks down in chaos is not the chain of action and reaction, but predictability. The universe is still a gigantic clockwork, even though we can’t be sure where the minute and hour hands will point a week hence.”

Another objection, though slightly tangential to the concept of determinism itself, says that Laplace’s demon could never exist, because there is a finite limit to the amount of computation that can take place within the universe. This argument comes from Seth Lloyd, whose paper Ultimate Physical Limits to Computation and related work on the computational capacity of the universe estimate that the universe could have performed only about 10^120 basic operations thus far, so calculating every event with certainty would require more computational resources than exist. I won't pretend to remotely understand the math here (pre-calculus 12 is plenty demanding for me), but it's interesting stuff!

Modern Physics and the Debunking of Determinism

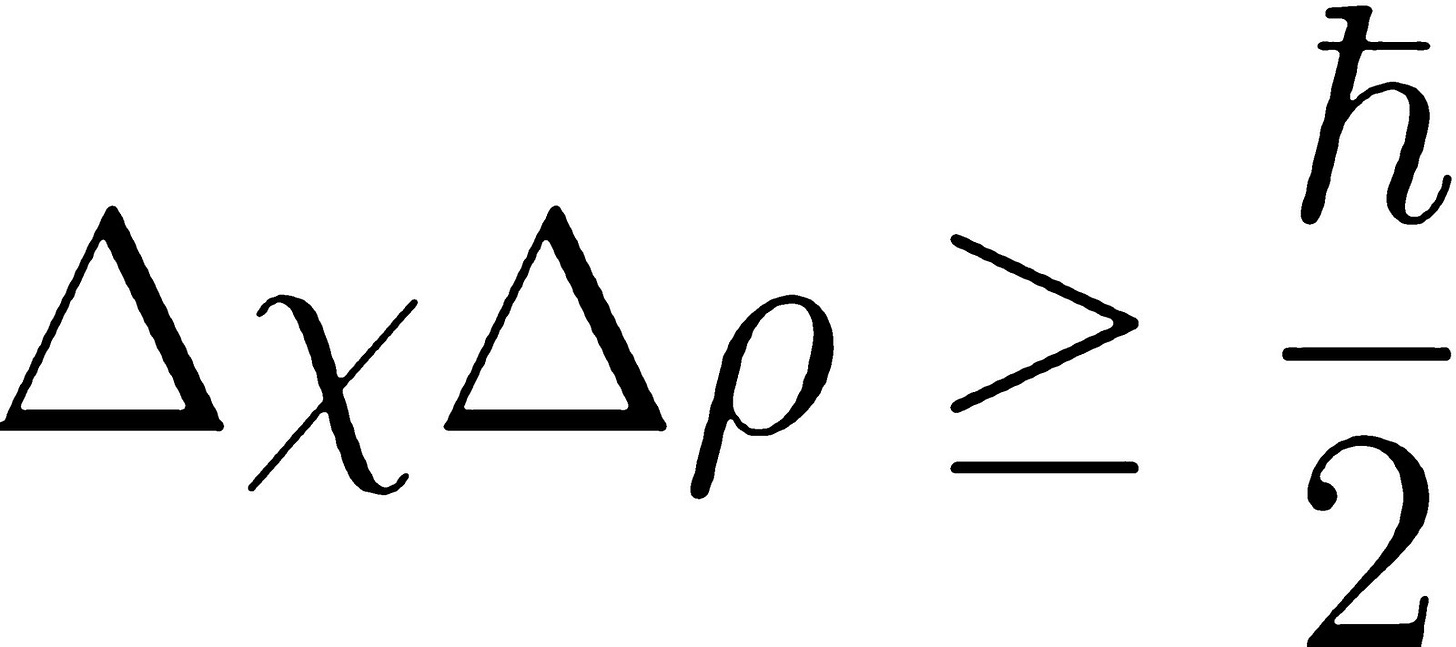

The more refined and relevant objection to Laplace's construction is the idea of quantum uncertainty, first established by physicist Werner Heisenberg in 1927. The core idea is that the more precisely a particle's position is pinned down (the uncertainty Δx in the equation below), the less precisely its momentum can be known (the uncertainty Δp). The relation is usually written Δx·Δp ≥ ħ/2, where the special character ħ refers to Planck’s Constant divided by 2π. In other words, there is a fundamental limit on how accurately both quantities can be measured together on a quantum level.

Figure 2: Heisenberg’s Uncertainty Principle
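To get a feel for the size of this limit, here is a rough sketch using the standard form of the relation, Δx·Δp ≥ ħ/2. It asks: if an electron is confined to a region about the width of an atom (my assumed value of 1e-10 m), how uncertain must its momentum and speed be? The function name is hypothetical and the constants are the standard SI values.

```python
HBAR = 1.054571817e-34            # reduced Planck constant, in joule-seconds
ELECTRON_MASS = 9.1093837015e-31  # in kilograms


def min_momentum_uncertainty(delta_x):
    """Smallest delta_p allowed by delta_x * delta_p >= hbar / 2."""
    return HBAR / (2.0 * delta_x)


delta_x = 1e-10  # assumed position uncertainty: roughly the width of an atom, in metres
delta_p = min_momentum_uncertainty(delta_x)
delta_v = delta_p / ELECTRON_MASS  # corresponding spread in speed (non-relativistic)

print("minimum momentum uncertainty (kg*m/s):", delta_p)
print("minimum speed uncertainty (m/s):", delta_v)
```

The speed comes out uncertain by hundreds of kilometres per second. For everyday objects the same limit is far too small to notice, which is why Newtonian predictions work so well at human scales.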

The consequence for our understanding of determinism is very significant. It is no longer simply that humans will never have a complete knowledge of causality; it is that this knowledge may not exist, because a particle does not have a precisely defined position and momentum at the same time to begin with. This is what underlies the Copenhagen Interpretation, and within it, the idea of wave function collapse: when a measurement is made, a superposition of possible states, each with its own probability, reduces into one actual outcome.

There is some debate as to whether probabilistic quantum behaviour is compatible with the predictable behaviour that is asserted by classical physics. It seems intrinsically contradictory that singular outcomes can be predicted based on a set of variables if it is true that these outcomes are probabilities and not certainties. By that standard, quantum indeterminacy threatens the premise of determinism fundamentally. Some have suggested that the randomness of quantum mechanics is a justification for the conscious experience of free will, citing molecular indeterminacy in the context of neural chemistry. Heisenberg himself alludes to this interpretation in his delightful book, Physics and Philosophy. This explanation is often paired with the theory that there is a "higher level" of consciousness which transcends the chemical interactions of cells and molecules (Ben Shapiro sums this up rather neatly in a debate with Sam Harris). I'm inclined to find this rationalisation of free will quite attractive. Who doesn't like freedom? But logically, while quantum uncertainty may broaden the possibility for the existence of free will, it can hardly be considered conclusive evidence for its existence. Considering Russell's Teapot and all, the more sensible conclusion might be to default to the assumption that free will does not exist.

Conclusions

The question of whether our universe is deterministic has perplexed generations of physicists, neuroscientists, and philosophers alike, and still remains unresolved. On some level, the belief that the world is predetermined (or random, for that matter) is deeply unsettling to most people, myself included. It seems more meaningful to live in a world within which we have the capacity to make free choices, and both causal determinism and randomness appear to threaten the foundations of who we believe ourselves to be. In my personal experience, watching Minority Report has induced many fatalistic existential crises. To some extent, though, I wonder if our collective obsession with proving free will stems from the psychological discomfort of being slaves to determinism, and the nihilistic connotations that accompany that thought. (Free will is also a convenient prerequisite to Western glorification of individualism and free market capitalism, but that’s an aside.)

Still, I don’t think philosophical departures from free will necessarily have to erode humanity’s importance. If we liberate ourselves from the anthropocentric notion that human meaning is solely defined by our ability to act independently from nature, we can potentially embrace a more holistic comprehension of human civilization in relation to our scientific origins. As Carl Sagan puts it in Cosmos, “We seek a connection with the Cosmos. We want to count in the grand scale of things. And it turns out we are connected—not in the personal, small-scale unimaginable way that the astrologers pretend, but in the deepest ways, involving the origin of matter, the habitability of the Earth, the evolution and destiny of the human species.” Even if we do not have free will in the sense that we imagine, being a part of a (somewhat) deterministic universe could be viewed as part of a more meaningful approach to evaluating human worth.

Bibliography: https://docs.google.com/document/d/1fco68qvZK2pvTbT9Q3mTm_DTAbkYkmRu776C1pSGMmc/edit?usp=sharing